Simplified infrastructure with Istio (yes, really!)

TL;DR: Istio automates manual processes, provides built-in security and enables team autonomy.

Istio is known by many names: Service Mesh, API Gateway, Nightmare-Inducing-Software.

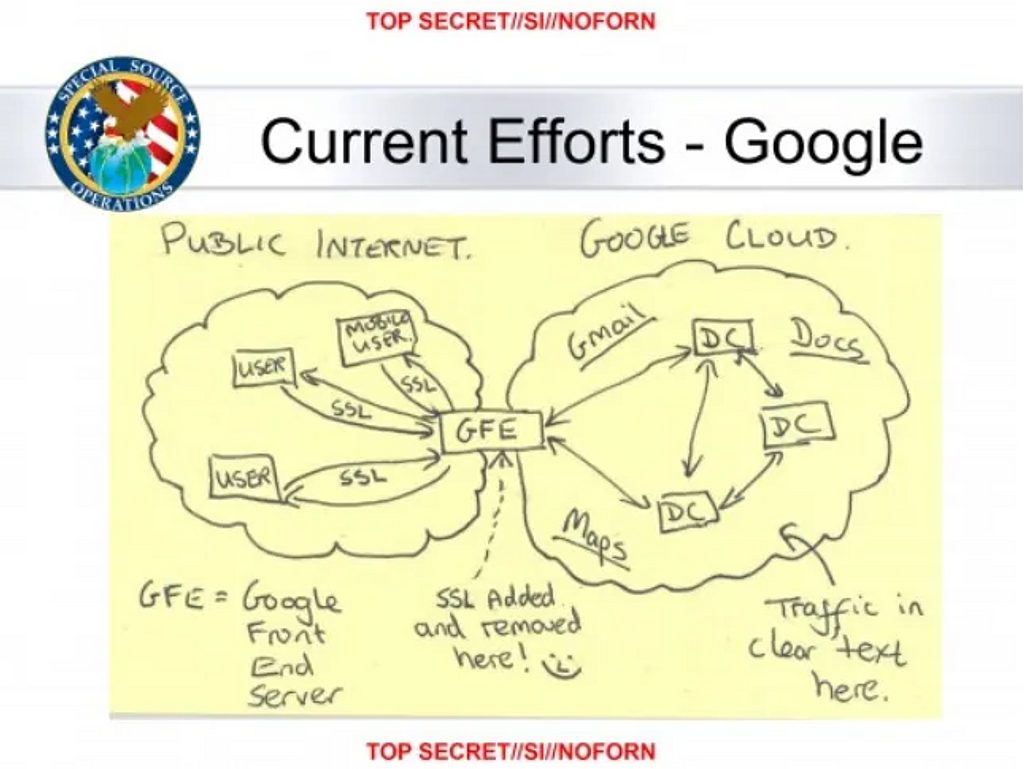

This brings some confusion as to what Istio actually does. And what does it do? It configures reverse proxies (specifically Envoy). What is a reverse proxy, you say? It’s a web server configured to proxy web traffic.

This pattern is referred to as the control plane (Istio) and data plane (Envoy) - as it’s also called in Software Defined Networking (SDN).

# Why a dedicated tool to configure “just” a web-server?

At a certain point, scaling your infrastructure vertically is no longer a viable option (although much later than you might think!), and you start scaling horizontally. Scaling horizontally can be managed using configuration management tooling (Puppet/Chef/Ansible/SaltStack and the like), which is really good at handling deployments with static configuration (like .conf or .cer files in a file system combined with SIGHUP). This may work well for a long time, but it’s not built for the dynamic configuration changes needed to leverage cloud native and the elasticity of cloud. These tools are also generic in nature, meaning the complexities of networking, certificates, HTTP and gRPC are not really addressed.

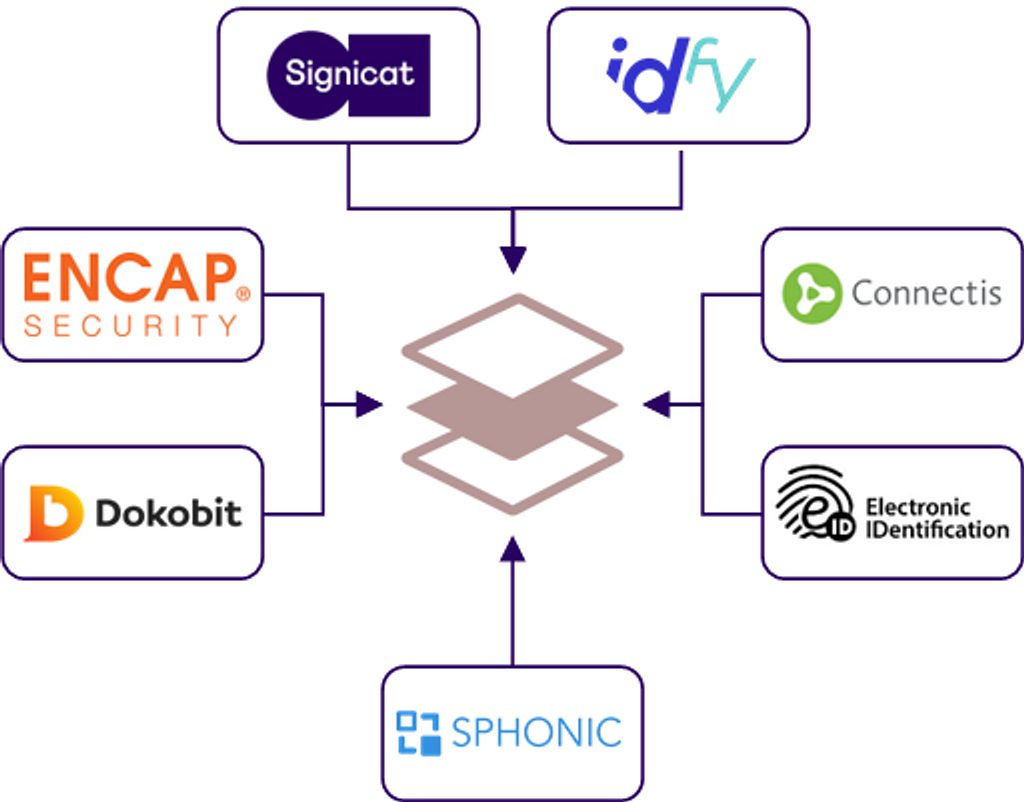

# Signicat's problem domain

# Microservice architecture → Kubernetes

Signicat is currently breaking up existing monoliths and consolidating products with best of breed selection across implementations in several programming languages. Using containers and Kubernetes, we can add and scale services independently without manual coordination. VMs with multiple products, or the provisioning of dedicated VMs for each service, are no longer centrally managed. Additionally, Signicat uses api.signicat.com as the main hostname for customers to connect to our API products, in order to unify our integration point.

# Scale-up → growing number of teams and resource requirements increase exponentially

Software ownership is placed with the implementing teams. This is because complexity has skyrocketed with:

"ephemeral and dynamic, far-flung and loosely coupled, partitioned, sharded, distributed and replicated, containers, schedulers, service registries, polyglot persistent strategies, auto scaled, redundant failovers and emergent behaviors" – Charity Majors, 2022

Signicat needs to ensure ownership of products within each team. This requires team autonomy. Team autonomy means teams need sufficient access to manage their deployment on their own. This includes HTTP traffic routing, and preferably traffic shaping (canary releases), tuning of rate limits and observability of traffic. Providing team isolation (limited blast radius) while at the same time granting the required flexibility is particularly important.

Signicat also needs to have sufficient capacity to meet ever-growing traffic. Capacity pre-planning works if there is a central team with full insight into all product and feature launches, marketing campaigns, existing customers' annual usage patterns and new customers' usage expectations. With a growing product portfolio and number of teams, this typically includes a significant amount of capacity buffer. Being a scale-up, cost discipline is extremely important in order to be successful. Being able to adapt capacity quickly enables reduced overall cost without increasing risk.

# White labeling and customers in regulated industries

Signicat provides white labeling of our products (where the Signicat brand is not directly visible to the end user), and we integrate with a lot of regulated vendors which mandate various compliance schemes.

One example is the login page of a bank. You may go to login.somebank.org, with only SomeBank logos and visible domain, while it’s pointing to Signicat through CNAME. This Signicat feature has multiple relevant platform requirements:

1. Web-server security is always important, but within the regulated space Signicat operates in, some very specific (and audited) requirements include:

   a. Predefined TLS ciphers and required TLS version support, including mutually exclusive requirements between third party vendors (!)

   b. Encryption keys stored in a Hardware Security Module (HSM)

   c. HTTP Strict Transport Security (HSTS)

   d. Strict compliance limits on Certificate Authority (CA) for TLS for specific products

   e. Specific certificate revocation implementation (OCSP Stapling)

2. Various customer requirements

   a. IP Whitelisting (in and out)

   b. Adherence to customer CAA records

   c. Encryption of all network traffic

3. To enable full self-service, we need infrastructure integration

   a. Configuration for reverse proxy listening for hostnames

   b. SSL certificate handling

      - Issuing and monitoring

      - Custom certificate upload

   c. Reverse proxy certificate distribution and configuration

# Mergers and acquisitions

Signicat contains products and technology from several companies, built on very different architectures, programming languages and frameworks.

This includes Java, .NET, PHP, Kotlin, Scala and more. Even if the programming language is the same as within other groups, the frameworks and coding patterns used are in some cases so different that enabling shared libraries becomes a real challenge. And security in an interconnected microservice architecture is only as strong as the weakest link in the chain. Adding security at the platform layer is therefore strongly preferred.

# Existing complexity and the way forward

Signicat has been around for longer than containers, cloud native or even elastic cloud. And we have been tackling many of the same challenges with the tools common at the time of implementation. Examples from the existing implementation include:

- API Gateway implemented using Apache as reverse proxy

- Apache itself is compiled by Signicat, originally to support specific cipher requirements/different SSL version than upstream

- Custom build script containing dynamic ModSecurity rules bundled into the Apache binary build script

- Configuration (TLS, hostnames, upstreams) through files and SIGHUP (and in some cases restart)

- HAProxy designed in as an internal load balancer (eventually not shipped) between API Gateway (Apache) and internal web servers

- Configured through configuration files and SIGHUP

- Lacking encryption at the network layer

- Mitigated by rigid network firewall rules both internal and external. Anything not explicitly allowed by the operations team is blocked

- Additional security with OS level proxy for outgoing connections (Squid)

- Central internal management APIs without authentication

- Limited visibility into network flows

- Mitigated by monolithic architecture, reducing the need

- Black hole issues (such as firewall blocking) hard to pinpoint and distinguish from other problems

- All production servers centrally managed. No developer access (no team autonomy)

- Configuration management using Puppet agent (client) using control repo pushed to Puppet master. Client operation has 5 minute convergence time (5 minute check interval + catalog compilation)

- TLS certificates added into Puppet git repo, with private key as encrypted string in git

- All certificates explicitly added (certbot, or manually ordered and renewed)

- Active/passive deployment schedule keeping >50% capacity free at all times with manual reconfiguration

- Connections not dropped within a given time are forcefully terminated through a web server restart, on every upgrade

- SaltStack minions installed to enable manual remote task execution out of band (such as forcing Puppet client to re-read manifests)

- Adding capacity requires provisioning a new node, complete with Puppet configuration defined in code

- Manually configuring new node into load balancer and/or reverse proxy (API Gateway) Puppet control repo

- Partly mitigated by introducing HashiCorp Consul as service catalogue integrated with HAProxy


# Design principles

To tackle Signicat's problem domain in the new paradigm, Signicat came up with a few overall key design principles to help guide architecture decisions and the various implementations of a solution:

- Ease of use for "service owners" (at the expense of "platform owners" if needed)

- Secure by default (but with a strong preference towards low complexity when possible)

- Industry standard (at the expense of "better" solutions not widely adopted if needed)

# How Istio helps us

Istio provides a consistent API for north-south traffic and east-west traffic. This covers a lot of functionality. Functionality comparable to Istio/Envoy on Kubernetes exists in our legacy setup, but it involves a lot of moving parts:

- Data plane: Apache (Signicat compiled), Squid, HAProxy and firewall ACLs

- Control plane: Puppet, SaltStack, Consul, manual certificate provisioning and scaling, manual external load balancer configuration

Yet these legacy components and processes provide insufficient functionality in a modern cloud ecosystem.

Additionally, Istio enables uniform security at the platform layer. This is extremely useful in an audit context, because it is easier to reason about than various individual components in different products.

# API Gateway (north-south traffic)

An elastic microservice architecture means that the load balancer and ingress need to be configured dynamically, so that traffic reaches new services and follows changes in scaling. But doesn’t Kubernetes already fix all of that?

Signicat is using api.signicat.com as the main hostname for customers to connect to our API products. With the monolith breakup, this is now split into multiple services providing the different functionality. The native abstraction for receiving traffic from the outside into Kubernetes Services is the concept of Ingress. This takes care of north-south traffic (outside<->inside traffic). It defines which hostname and port to listen on, and basic TLS configuration. Now, the Ingress definition alone does nothing. You need to install an Ingress Controller (e.g. Istio or NGINX) in your cluster, which includes a reverse proxy and a Kubernetes Operator. This Controller translates the Ingress definition into configuration for the reverse proxy, and usually also configures a load balancer.

Options for the Ingress object are very limited. Want to specify specific TLS ciphers? Not possible. Route on anything but path (e.g. a header)? Nope. Check authorization before forwarding? Rate limits? Not a chance.

What about allowing teams to modify some settings, but not all? No.

This is where an API Gateway implementation like Istio comes into play. Istio Ingress (API Gateway) provides:

- Gateway resource for configuring layer 4-6 load balancing properties such as ports to expose and TLS settings

- In addition to Ingress Gateway, Istio also supports Egress Gateway to limit outgoing traffic

- VirtualService resource for configuring application-layer traffic routing (layer 7), bound to Gateway

- Istio supports route delegation, or sub-routes (in a similar manner to DNS subdomains). This enables team granularity and ownership over specific paths

- Istio provides a Secret Discovery Service (SDS), which detects changes to Kubernetes Secrets holding TLS certificate data and updates the corresponding reverse proxies receiving ingress traffic
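
To make the resources above more concrete, here is a minimal sketch of how a Gateway, a root VirtualService and a team-owned delegate VirtualService could fit together. It builds the manifests as plain Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. Every name in it (namespaces, the `/signing` path, the secret and service names) is a hypothetical placeholder and not Signicat's actual configuration.

```python
import json

# All names below (namespaces, secret, services, paths) are hypothetical.

def gateway(name, namespace, host, credential_name):
    """Istio Gateway: which port and host to expose, and how to terminate TLS."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Assumes the ingress gateway pods carry the default label.
            "selector": {"istio": "ingressgateway"},
            "servers": [{
                "port": {"number": 443, "name": "https", "protocol": "HTTPS"},
                "hosts": [host],
                # credentialName points at a Kubernetes Secret served through SDS.
                "tls": {"mode": "SIMPLE", "credentialName": credential_name},
            }],
        },
    }

def root_virtual_service(name, namespace, host, gateway_ref, delegations):
    """Root VirtualService bound to the Gateway.

    `delegations` maps a path prefix to (delegate name, delegate namespace).
    """
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "gateways": [gateway_ref],
            "http": [
                {"match": [{"uri": {"prefix": prefix}}],
                 "delegate": {"name": d_name, "namespace": d_ns}}
                for prefix, (d_name, d_ns) in delegations.items()
            ],
        },
    }

def delegate_virtual_service(name, namespace, prefix, service_host, port):
    """Team-owned delegate: no hosts or gateways, only routes under its prefix."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "http": [{
                "match": [{"uri": {"prefix": prefix}}],
                "route": [{"destination": {"host": service_host,
                                           "port": {"number": port}}}],
            }],
        },
    }

manifests = [
    gateway("api-gateway", "istio-system", "api.signicat.com",
            "api-signicat-com-tls"),
    root_virtual_service("api-root", "istio-system", "api.signicat.com",
                         "istio-system/api-gateway",
                         {"/signing": ("signing-routes", "team-signing")}),
    delegate_virtual_service("signing-routes", "team-signing", "/signing",
                             "signing.team-signing.svc.cluster.local", 8080),
]
for manifest in manifests:
    print(json.dumps(manifest, indent=2))
```

In a layout like this, the platform team would own the Gateway and the root VirtualService, while each product team owns only the delegate in its own namespace.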

Signicat uses these features extensively.

# Example

White label bank login use-case:

- Terminate TLS connections

- Specific TLS ciphers and TLS versions as required (fills requirement 1a, replaces legacy: Apache)

- Configure customer domains and corresponding certificates dynamically (fills requirements 1c, 1d, 2c, replaces legacy: manual certificate deployment, and reduces a manual SaltStack action or a 5 minute wait to updates within a second)

- Self-service domain and certificate management integration through Kubernetes API and RBAC (fills requirements 3a, 3b, replaces legacy: manual certificate ordering)

- Scales as an independent service

- Scales using Kubernetes like any other service (including rolling updates). (replaces legacy: manual provisioning of new ingress nodes and load balancer config updates, HAProxy internal load balancer, active/passive deployment, SaltStack for triggering out of band updates)

- Team autonomy, and responsibility boundary between platform owners and service owners

- The VirtualService separation and corresponding delegation (replaces legacy: Apache VirtualHost, central management, HAProxy internal load balancer)
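
As an illustration of the TLS part of this use case, the sketch below generates one Gateway `servers` entry per white-label customer domain, each with its own certificate secret and TLS policy. Putting all customers in a single Gateway is an assumption made for brevity, and the domains, secret names and cipher choices are hypothetical. The `minProtocolVersion` and `cipherSuites` fields are part of Istio's Gateway TLS settings.

```python
import json

# Hypothetical customers; domains, secrets and TLS policies are illustrative.
CUSTOMERS = [
    {"domain": "login.somebank.org", "secret": "login-somebank-org-tls",
     "ciphers": ["ECDHE-RSA-AES256-GCM-SHA384"]},
    {"domain": "login.otherbank.example", "secret": "login-otherbank-tls",
     "min_tls": "TLSV1_3"},
]

def customer_server(domain, secret, min_tls="TLSV1_2", ciphers=None):
    """One Gateway server entry: hostname, certificate secret and TLS policy."""
    tls = {"mode": "SIMPLE", "credentialName": secret,
           "minProtocolVersion": min_tls}
    if ciphers:
        tls["cipherSuites"] = list(ciphers)
    return {
        # Port names must be unique within a Gateway, so derive one per domain.
        "port": {"number": 443,
                 "name": "https-" + domain.replace(".", "-"),
                 "protocol": "HTTPS"},
        "hosts": [domain],
        "tls": tls,
    }

def white_label_gateway(name, namespace, customers):
    """Gateway with one server per customer, so TLS policies can differ."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": {"istio": "ingressgateway"},
            "servers": [
                customer_server(c["domain"], c["secret"],
                                c.get("min_tls", "TLSV1_2"), c.get("ciphers"))
                for c in customers
            ],
        },
    }

print(json.dumps(white_label_gateway("white-label", "istio-system", CUSTOMERS),
                 indent=2))
```

Because each server entry carries its own TLS block, two customers with mutually exclusive cipher or version requirements can be served side by side.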

# Service mesh (east-west traffic)

Another key feature set, and what Istio is really known for, relates to so-called “internal traffic”. This is usually referred to as a service mesh. There are three main feature categories:

- Reliability features. Request retries, timeouts, canaries (traffic splitting/shifting), etc.

- Observability features. Aggregation of success rates, latencies, and request volumes for each service, or individual routes; drawing of service topology maps; etc.

- Security features. Mutual TLS, access control, etc.
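
As a sketch of the reliability features, the following builds a VirtualService that splits traffic between a stable and a canary subset and adds a timeout and retries. The service name, namespace, subset names and timing values are hypothetical, and the subsets are assumed to be defined in a matching DestinationRule that is not shown here.

```python
import json

def canary_virtual_service(name, namespace, host, canary_weight,
                           timeout="5s", attempts=3, per_try_timeout="2s"):
    """VirtualService sending `canary_weight` percent of requests to the canary."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "http": [{
                "timeout": timeout,
                "retries": {"attempts": attempts,
                            "perTryTimeout": per_try_timeout},
                "route": [
                    # Subsets "stable" and "canary" are assumed to exist
                    # in a DestinationRule for this host.
                    {"destination": {"host": host, "subset": "stable"},
                     "weight": 100 - canary_weight},
                    {"destination": {"host": host, "subset": "canary"},
                     "weight": canary_weight},
                ],
            }],
        },
    }

# Hypothetical service: shift 10% of traffic to the canary.
print(json.dumps(canary_virtual_service("signing", "team-signing",
                                        "signing", 10), indent=2))
```

Shifting traffic then becomes a matter of updating the weights, without touching the deployments themselves.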

With the large scale move to microservices, the observability features have proven extremely useful. Especially in incident scenarios. Having a uniform view across all services enables faster debugging and creation of universally valuable dashboards (such as RED metrics).

With mutual TLS, we are also able to address the privacy/encryption concern related to internal traffic uniformly, and at the platform layer (as opposed to various implementations in different languages and frameworks).
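
For illustration, enforcing mutual TLS at the platform layer can be as small as a single PeerAuthentication resource. The sketch below assumes Istio's root namespace is the default `istio-system`, where a policy named `default` applies mesh-wide. It is not necessarily how Signicat has configured it.

```python
import json

def strict_mtls(namespace="istio-system"):
    """PeerAuthentication requiring mutual TLS for workloads in scope.

    Placed in Istio's root namespace (assumed here to be the default
    "istio-system"), the policy applies to the whole mesh.
    """
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }

print(json.dumps(strict_mtls(), indent=2))
```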

# Way of working

Istio is an enabler for Signicat's desired way of working. Istio allows for team isolation and autonomy at the API Gateway and mesh, and provides uniform platform solutions to common problems (Ref. Design Principle #1 and #2). With uniform security solutions, it becomes very easy to describe our security in an audit context. It also has the added benefit that we can confidently boast that for some classes of security issues, we’re in good shape due to being able to address them uniformly.

The alternative, without sacrificing team autonomy, would be relying on libraries and frameworks across all our programming languages to implement overlapping functionality. This is doable, but in particular with security features, it’s only as secure as the weakest link. This is also the reason why we chose to leverage Open Policy Agent (OPA) as our primary authorization scheme, to ensure consistency around security.

With Istio's large industry footprint, we also have great ecosystem support. For Signicat, this means certificate automation with cert-manager, canary releases with ArgoCD, OPA based authorizations, chaos engineering testing and routing with feature flagging.

Finally, Istio enables us to bridge the gap between the adjacent API gateway and service mesh (it’s just configuring reverse proxies after all!).

# A note on complexity

Istio has been touted as being too complex. Part of this is caused by the rich features available while providing a consistent API for north-south traffic and east-west traffic. Additionally, until Istio 1.6, there were three separate binaries to configure and deploy. Since 1.6, Istio has gotten a lot simpler to operate and deploy, with a much greater focus on simplicity from the project itself.

The components Istio interfaces with are in some cases extremely complex. Distributed systems are complex by nature. Abstractions help with managing complexities, but do not remove the underlying complexity. Kubernetes is complex. Webservers are complex. Networking is complex. Security is complex. Internal Development Platforms are complex. Modern software development is complex!

Even though Istio simplifies a lot, it is ultimately only an abstraction. In smaller organizations, you generally need fewer abstractions. At scale, this changes.

Ultimately, complexity is the cost of scale.

# Future

Looking forward, Kubernetes has recognized the need to provide vendor neutrality for API Gateways and adjacent technology as well. The project has launched a new class of Custom Resource Definitions (CRDs) called Kubernetes Gateway API. This API advanced to beta status in the summer of 2022 and has started gaining traction in the industry (including Istio). One notable missing area is support for service-to-service traffic (east<->west traffic), or service mesh. This is however also being worked on. With an officially backed API definition, we are likely to see a rise of competition in this space and hopefully interchangeable, mature managed solutions from hosting providers. Ideally, service meshes should simply fade out of sight and stay hidden behind Kubernetes. Will the future include Istio? Probably. But the battle of the service meshes is not over!
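
To give a feel for the vendor-neutral API, here is a rough sketch of a Gateway API `HTTPRoute`, using the `v1beta1` version that corresponds to the beta status mentioned above. The route, gateway, namespace and service names are hypothetical. The shape is comparable to an Istio VirtualService attached to a Gateway.

```python
import json

def http_route(name, namespace, gateway_name, gateway_namespace,
               hostname, prefix, service, port):
    """Gateway API HTTPRoute attaching a path prefix to a shared Gateway."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1beta1",
        "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # parentRefs plays the role of the Gateway binding.
            "parentRefs": [{"name": gateway_name,
                            "namespace": gateway_namespace}],
            "hostnames": [hostname],
            "rules": [{
                "matches": [{"path": {"type": "PathPrefix", "value": prefix}}],
                "backendRefs": [{"name": service, "port": port}],
            }],
        },
    }

# Hypothetical names throughout.
print(json.dumps(http_route("signing-route", "team-signing",
                            "api-gateway", "istio-system",
                            "api.signicat.com", "/signing",
                            "signing", 8080), indent=2))
```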